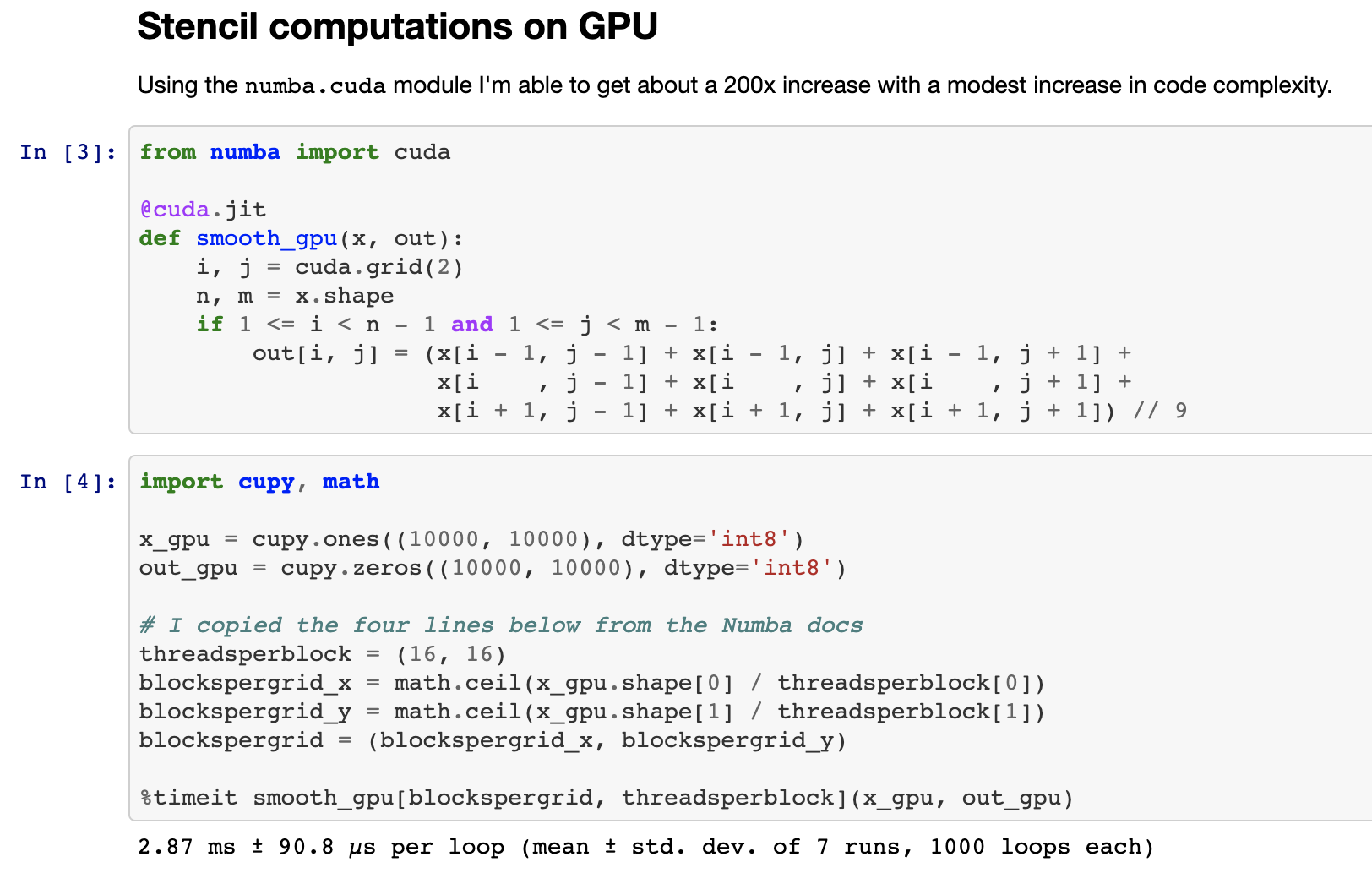

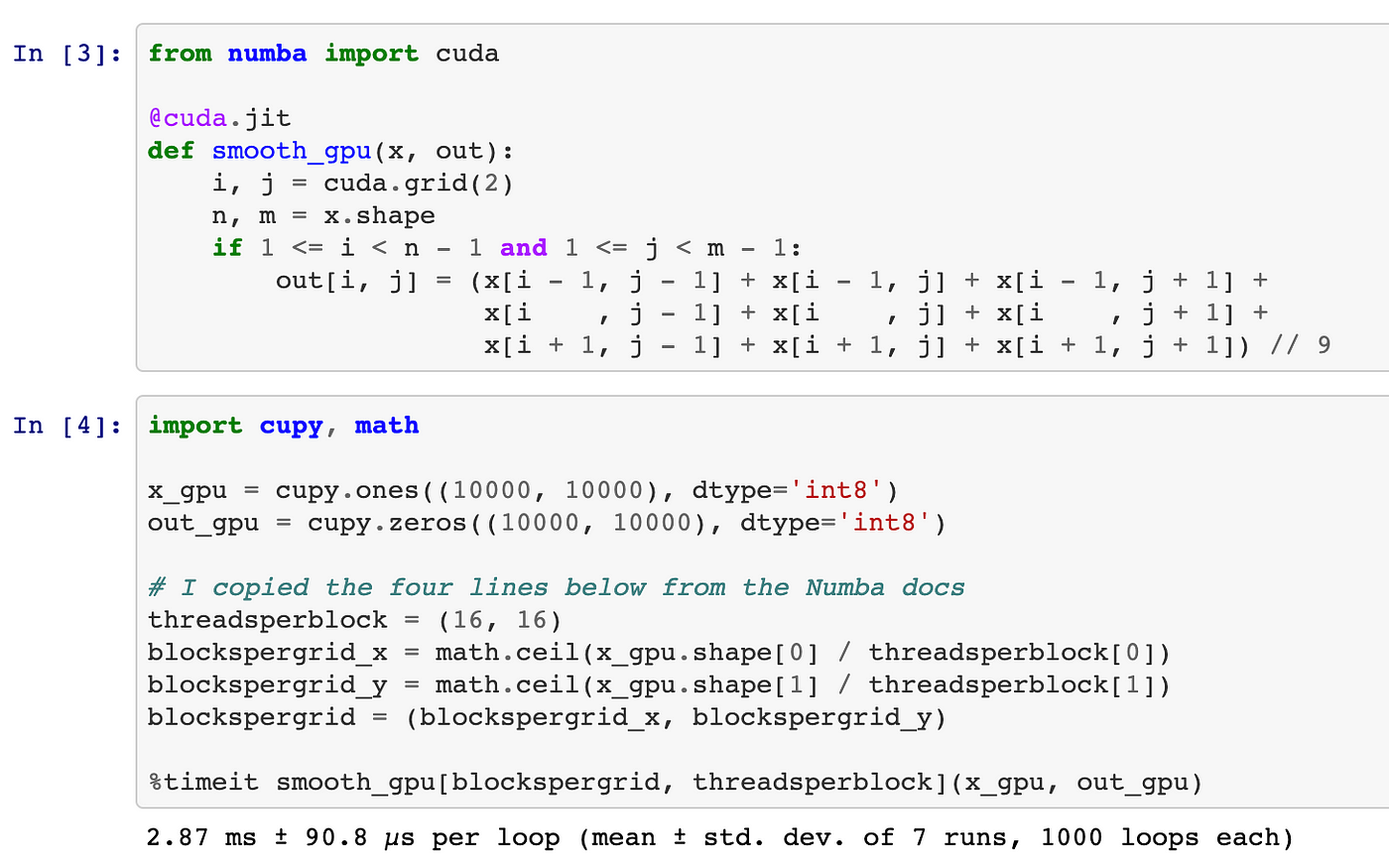

Python, Performance, and GPUs. A status update for using GPU… | by Matthew Rocklin | Towards Data Science

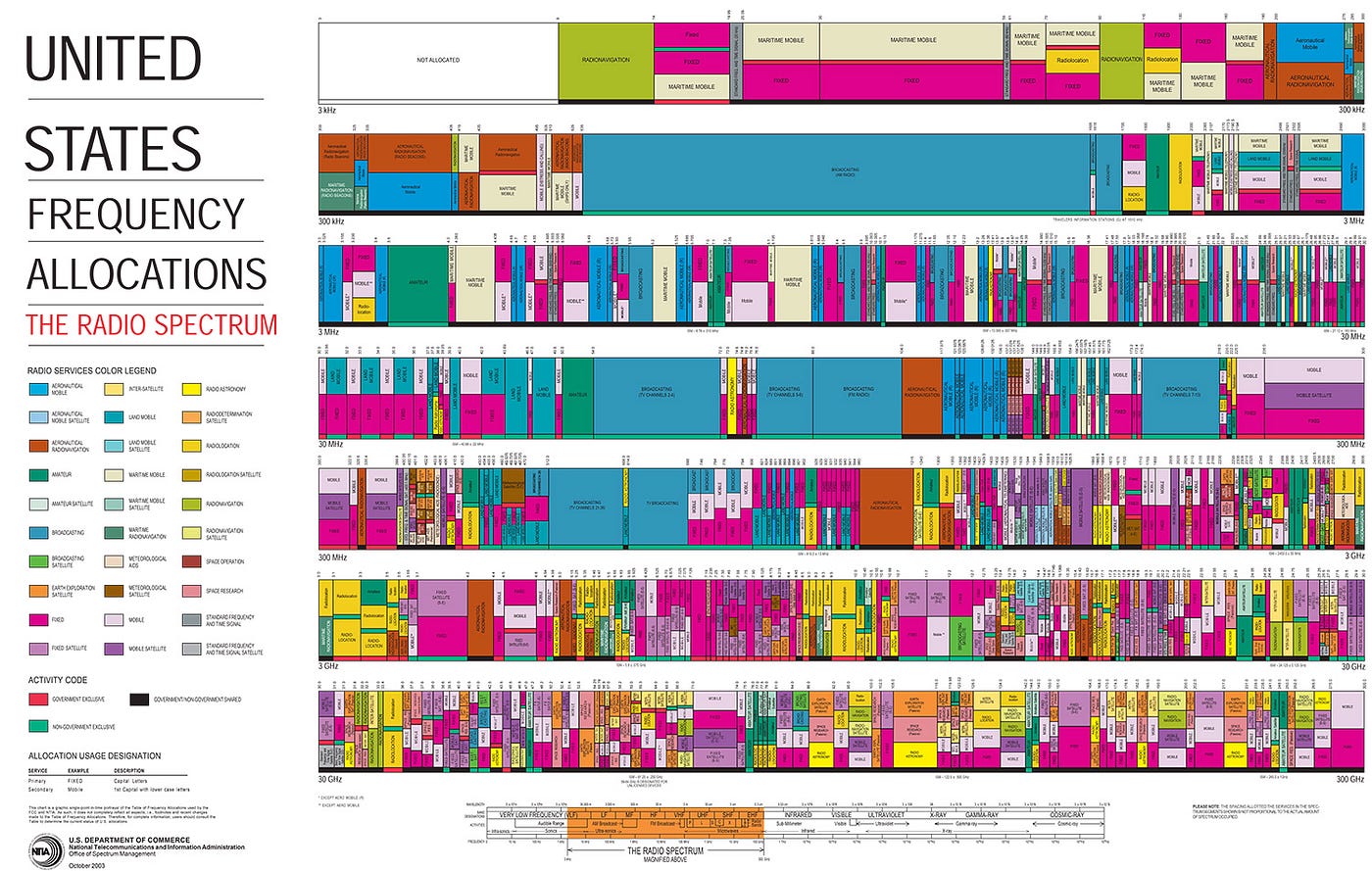

Beyond CUDA: GPU Accelerated Python for Machine Learning on Cross-Vendor Graphics Cards Made Simple | by Alejandro Saucedo | Towards Data Science

GitHub - meghshukla/CUDA-Python-GPU-Acceleration-MaximumLikelihood-RelaxationLabelling: GUI implementation with CUDA kernels and Numba to facilitate parallel execution of Maximum Likelihood and Relaxation Labelling algorithms in Python 3

A Complete Introduction to GPU Programming With Practical Examples in CUDA and Python - Cherry Servers

An Introduction to GPU Accelerated Graph Processing in Python - Data Science of the Day - NVIDIA Developer Forums